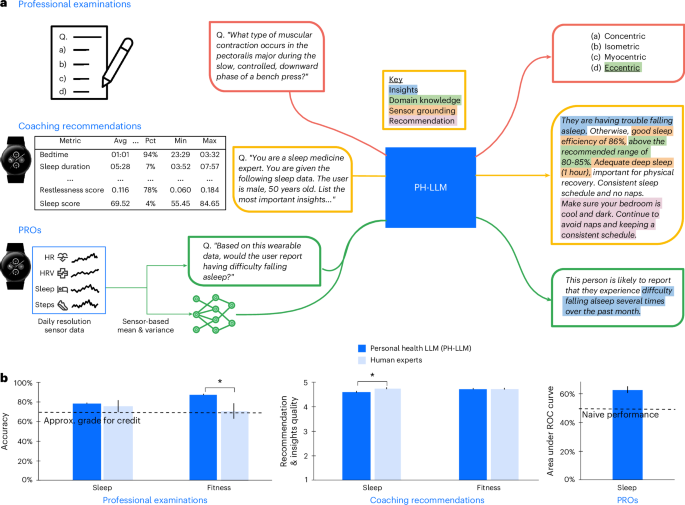

Owing to the absence of clearly defined language and multimodal datasets in the domain of personal health, we created datasets and associated tasks to evaluate different capabilities of PH-LLM. These datasets include professional examinations that test domain knowledge about sleep medicine and fitness, case studies about real-world coaching recommendations and PROs about sleep.

Professional examination dataset creation

Sleep medicine examinations: we compiled 629 MCQs from BoardVitals (https://www.boardvitals.com/) sleep medicine board review question banks. We used the American Medical Association (AMA) Physician’s Recognition Award (PRA) ‘Category 1 – Sleep Medicine’ question bank, which emulates examination content for the American Board of Internal Medicine (ABIM) Sleep Medicine Certification Exam. We also used the Sleep Medicine Maintenance of Certification (MOC) Exam and Longitudinal Knowledge Assessment Review question bank, which emulate examination content for the ABIM Sleep Medicine MOC Exam and the ABIM Longitudinal Knowledge Assessment. This compiled set of MCQs spanned a wide range of sleep-related topics: Normal Sleep and Variants (N = 127), Breathing Disorders (N = 84), Hypersomnolence (N = 60), Insomnias (N = 85), Movement Disorders (N = 23), Parasomnias (N = 57), Sleep in Other Disorders (N = 112) and Sleep-Wake Timing (N = 81).

A subset of 204 questions was selected for evaluation by sleep experts. Sampling was performed randomly to ensure similar distributions to the entire set of MCQs when stratified based on medical content categories (https://www.abim.org/Media/aypkdxpi/sleep-medicine.pdf) and their difficulty levels.
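As an illustration of the stratified random sampling described above, the following minimal sketch draws a proportional sample within each (content category, difficulty) stratum. The field names `category` and `difficulty`, the seed and the proportional-allocation rule are assumptions made for illustration; the exact procedure is not specified in the text.

```python
import random
from collections import defaultdict

def stratified_sample(questions, n_total, seed=0):
    """Draw roughly n_total questions, proportionally from each stratum.

    Each question is a dict with hypothetical keys 'category' and
    'difficulty'. Because of per-stratum rounding, the final size can
    differ slightly from n_total.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for q in questions:
        strata[(q["category"], q["difficulty"])].append(q)
    frac = n_total / len(questions)
    sample = []
    for key in sorted(strata):  # sorted for reproducibility
        group = strata[key]
        k = min(len(group), round(frac * len(group)))
        sample.extend(rng.sample(group, k))
    return sample
```

Proportional allocation keeps the category and difficulty distributions of the subset close to those of the full question bank, which is the property the sampling was meant to preserve.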

Fitness examinations: we compiled 99 MCQs sourced from multiple question banks that emulate the content of the Certified Strength and Conditioning Specialist (CSCS) examination, as covered in the preparation book provided by the National Strength and Conditioning Association (NSCA) (https://www.nsca.com/certification/cscs/certified-strength-and-conditioning-specialist-exam-description). We used the test examination questions from the NSCA-CSCS textbook Essentials of Strength Training and Conditioning.

Accuracy was used as the metric to evaluate the performance of our model in professional examinations, in line with previous work evaluating MedMCQA (ref. 44). Each question presents up to five possible answers, with a single correct answer. MCQs were not used for model training. All samples were used in evaluation.

Coaching recommendations dataset creation

Each of the 857 case studies was sampled from anonymized Fitbit production data from individuals who provided consent for research purposes. Case studies were designed to interpret a range of physiological sensor information toward deriving insights, potential causes or recommendations for future behaviors.

Creation of case studies consisted of four main parts, each developed in close collaboration with domain experts: identifying the primary goals of the case studies, selecting case study input features, sampling individuals for inclusion in case studies and creating gold standard case study responses.

The domain experts in each vertical (sleep and fitness) were divided into ‘primary’ and ‘secondary’ contributors to case study response creation and evaluation. This categorization was based on each expert’s general availability; ‘primary’ contributors had more involvement and higher workload volumes. The level of domain expertise was similar across the two groups. The sleep and fitness verticals were each led by a clinical expert in the respective field. The clinical lead oversaw case study development and provided feedback and quality control to the set of domain experts.

Sleep case study dataset creation

We recruited six domain experts, all of whom possessed advanced degrees (MD, DO or PsyD) in sleep medicine and professional sleep medicine work experience ranging from 4 years to 46 years. All experts were trained to read and interpret wearable data and map outputs to their corresponding sleep medicine literature counterparts.

Primary goals: the sleep case studies aimed to enhance understanding of sleep patterns, identify causes of irregular sleep and offer actionable recommendations based on these findings, with the ultimate objective of enhancing the sleep quality of the individual.

Input feature selection: each sleep case study incorporated demographic information (age and self-reported gender), daily sleep metrics (for example, bedtimes, wake times and sleep stage durations) and aggregated sleep statistics (for example, average bedtime) (Fig. 2a). The daily sleep metrics contained up to 29 days of data across 16 metrics (Supplementary Table 26). The aggregated sleep statistics included multiple summary measures for each daily metric computed over all the days, the percentile that the aggregated metric is in as compared to other individuals within the same demographic group and the 5th and 95th percentiles of the aggregated metric as compared to other individuals within the same demographic group. For some metrics, such as bedtime, the metrics were computed separately for all days, weekdays only and weekends only to understand weekday versus weekend patterns (Supplementary Table 27).

Sample selection: the dataset was sampled to enrich for non-missingness and achieve a representative group across age and self-reported gender (Extended Data Fig. 10a and Supplementary Table 25). We first limited the data to individuals with at least 28 sleep events within the 29-day window. We then sampled from 64 different demographic groups, determined by a combination of 32 different age buckets (13–20 years old and 20–80 years old, with each group within this range spanning 2 years, and 80 years old and above) and two gender buckets (male and female). Each case study (N = 507) was created from a unique individual. The distribution of the number of days for which data are available in the sleep case studies is presented in Supplementary Fig. 12a.
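The demographic grouping above (32 age buckets × 2 gender buckets = 64 groups) can be sketched as follows. The half-open boundary handling (for example, age 20 falling in the 20–22 bucket rather than 13–20) and the bucket labels are assumptions, because the text does not state how boundary ages were assigned.

```python
from itertools import product

GENDERS = ("male", "female")

def age_bucket(age):
    """Map an integer age to one of 32 bucket labels (boundaries assumed half-open)."""
    if age < 13:
        raise ValueError("ages below 13 are outside the sampled range")
    if age < 20:
        return "13-20"
    if age >= 80:
        return "80+"
    lo = 20 + 2 * ((age - 20) // 2)  # 2-year buckets between 20 and 80
    return f"{lo}-{lo + 2}"

def all_groups():
    """Enumerate every (age bucket, gender) combination."""
    ages = ["13-20"] + [f"{lo}-{lo + 2}" for lo in range(20, 80, 2)] + ["80+"]
    return list(product(ages, GENDERS))
```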

Gold standard response creation: experts were instructed to interpret the case study input data to craft guidance in the second-person narrative, fostering a direct and personalized dialogue with the user. The case study input data were presented to the experts in both graphical and tabular formats for ease of analysis (Fig. 2a and Supplementary Tables 26 and 27). The experts composed responses across the following sections:

Insights: implicitly, this section answered the question of ‘What are some sleep-related insights based on my data?’ The sleep medicine expert examined the data and provided an interpretation of whether a data point might represent an atypical sleep pattern. The experts were asked to systematically review each case to provide a holistic assessment of the user’s sleep patterns. To do so, Fitbit sleep metrics were assessed according to the validated Routine, Sleep Quality, Alertness, Timing, Efficiency and Duration (RU-SATED) framework to generate sleep insights (ref. 45).

Etiology: implicitly, this section answered the question of ‘What are the possible underlying causes that could explain the observed data?’ The experts generally considered the contribution of circadian rhythm, homeostatic drive, psychophysiologic hyperarousal and extrinsic factors and indicated their likelihood.

Recommendations: implicitly, this section answered the question of ‘What can I do to improve my sleep?’ The experts were asked to provide personalized recommendations to the individual that can help them improve their sleep by addressing potential causes identified in the etiology section. The experts were instructed to create recommendations that are Specific, Measurable, Achievable, Relevant and Time-bound (SMART; ref. 46).

Fitness case study dataset creation

We recruited seven domain experts in fitness to analyze an individual’s quantitative fitness data. The seven fitness experts all possessed advanced degrees (MS, MA, MEd or DAT) related to the athletic training field and professional athletic training work experience ranging from 4 years to 25 years.

Primary goals: the fitness case studies were designed to provide a comprehensive analysis of an individual’s training load, sleep patterns and health metrics, with the ultimate objective of synthesizing the metrics into a data-driven assessment of the extent to which the individual is prepared for physical activity today, and to provide associated recommendations.

Input feature selection: similar to the sleep case studies, fitness case study inputs spanned demographic information (age, self-reported gender, height, weight and body mass index (BMI)), wearable sensor daily values for cardiovascular training load, sleep and health metrics and aggregated statistics (Fig. 2b). The quantitative fitness data contain up to 30 days of data.

Sample selection: we enriched for samples containing sufficient activity for interesting training readiness analysis by considering individuals who logged more than 30 lifetime runs and had, within a contiguous 21-day window, mean active zone minutes of at least 45 minutes and, at most, 5 days of missing data for step counts, sleep, heart rate variability and resting heart rates. We further filtered to person-days with at least two logged exercises in the past 28 days and that contained noticeable recent changes in heart rate variability, resting heart rate, sleep, active zone minutes or run activity. Each case study (N = 350) targets a unique person-day combination, with N = 58 unique individuals in the dataset. The distribution of the number of available days for fitness case studies is presented in Supplementary Fig. 12b. The demographics of the individuals in fitness case studies are presented in Extended Data Fig. 10b and Supplementary Table 25 for age and gender and in Supplementary Fig. 13 for BMI, height and weight.

Gold standard response creation: experts were instructed to formulate insights, assessments and recommendations in the second-person narrative. The case study input data were presented to the experts in tabular, text and graphical formats. The experts composed responses across the following sections:

Demographics: the experts considered the demographics data (age, self-reported gender, height, weight and BMI) and commented on whether any precautions should be taken when recommending a fitness program.

Training load: the experts were provided with a detailed table capturing daily metrics over the past 30 days, including day of the week, date, minutes spent in fat-burn, cardio and peak zones, training impulse (TRIMP), which is a training load measure derived from heart rate and exercise duration, and number of steps (Supplementary Table 28). Daily TRIMP values were additionally visualized in a bar plot (Fig. 2b). Aggregate statistics were also provided, including means; ranges; acute TRIMP (7-day total training load), a measure of acute training load; chronic TRIMP (28-day average acute training load), a measure of chronic training load; and acute:chronic workload ratio (ACWR), aiding the assessment of training stress. Recent exercise logs and associated metrics were provided for each exercise entry (Supplementary Table 29).
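The aggregate training load statistics can be illustrated with a short sketch. It assumes that acute TRIMP is the sum of the last 7 daily TRIMP values, that chronic TRIMP is the mean weekly (7-day) load over the last 28 days, and that ACWR is their ratio. The chronic definition in particular is one reading of "28-day average acute training load"; the function name and input format are hypothetical.

```python
def workload_summary(daily_trimp):
    """Return (acute, chronic, acwr) from a list of daily TRIMP values.

    Assumptions: acute = total of the last 7 days; chronic = average
    weekly total over the last 28 days; ACWR = acute / chronic.
    """
    if len(daily_trimp) < 28:
        raise ValueError("need at least 28 days of TRIMP values")
    last28 = daily_trimp[-28:]
    acute = sum(last28[-7:])
    chronic = sum(last28) / 4  # four 7-day blocks in 28 days
    acwr = acute / chronic if chronic > 0 else float("nan")
    return acute, chronic, acwr
```

Under these assumptions, a perfectly steady training load yields an ACWR of 1, and values above 1 indicate that the most recent week was heavier than the 28-day norm.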

Sleep metrics: the data included nine daily sleep metrics: day of the week, date, bedtime, wake time, sleep time, awake time, deep sleep duration, rapid eye movement (REM) sleep duration and the sleep score (Supplementary Table 30). Experts were also given aggregated metrics, including means, standard deviations and z-scores, indicating the difference in metrics between the most recent 3 days and the past 28 days to identify recent trends (Supplementary Table 31). Select sleep metrics were additionally visualized (Fig. 2b).

Health metrics: daily data on day of the week, date, resting heart rate, heart rate variability and respiratory rate were provided to assess recovery and stress (Supplementary Tables 32 and 33), along with graphical representations (Fig. 2b). The experts were also given aggregate metrics, such as means, standard deviations, ranges and z-scores, indicating the difference in metrics between the most recent day and the past 28 days (Supplementary Table 34), to gauge changes over time and assess recovery and stress levels effectively.
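The z-scores used for the sleep metrics (most recent 3 days) and health metrics (most recent day) can be sketched as below. This is an assumed formulation: the mean of the recent days minus the 28-day mean, divided by the 28-day sample standard deviation, with the recent days included in the 28-day baseline. The text does not give the exact formula.

```python
import statistics

def recent_z(values, recent_days):
    """z-score of the mean of the last `recent_days` values against the last 28 days.

    Assumptions: baseline includes the recent days; sample standard
    deviation is used; a constant series returns 0.0.
    """
    baseline = values[-28:]
    sd = statistics.stdev(baseline)
    if sd == 0:
        return 0.0
    recent = statistics.fmean(baseline[-recent_days:])
    return (recent - statistics.fmean(baseline)) / sd
```

For example, `recent_z(resting_hr, 1)` would flag how unusual the latest resting heart rate is relative to the past 28 days, and `recent_z(sleep_minutes, 3)` would do the same for the last 3 nights of sleep (both variable names are hypothetical).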

Assessment and recommendation: the experts integrated multiple data sources to evaluate workout readiness and provide personalized recommendations. They synthesized the most important insights from the previous sections along with simulated user feedback about their subjective state. The synthetically generated user feedback included a qualitative description of subjective readiness (for example, ‘feeling fatigued’) and muscle soreness (for example, ‘manageable soreness’) (Supplementary Tables 35 and 36). The synthetically generated user feedback was generated using Gemini 1.0 Pro (prompts are shown in Supplementary Tables 37 and 38). Based on all available information, the experts assessed the individual’s readiness to work out on a scale of 1 to 5 and provided specific fitness recommendations (Fig. 2b).

Holistic view of case study creation

Sleep and fitness case studies were collected from distinct sets of individuals. For both the sleep and fitness verticals, we generated two sets of data: a dataset used for model training, validation and testing and a holdout dataset that was used only for final evaluation of the model by experts (Extended Data Fig. 1).

To generate the dataset used for training, validation and testing, we first prompted the Gemini family of models with the data for each section in order to generate baseline model (Gemini Ultra 1.0) responses (Extended Data Fig. 1a). The experts then reviewed and rewrote the responses as needed. The dataset also underwent multiple rounds of quality control, engaging the experts and clinical leads. Separately, to generate the holdout dataset, the experts wrote the responses from scratch (without any LLM assistance). This was done to ensure a clearer comparison between experts and the model during final evaluation.

In total, we created 507 case studies for sleep (457 case studies for the training, validation and test set and 50 case studies for the holdout set, all drawn from unique individuals) and 350 case studies for fitness (300 case studies drawn from 48 individuals for the training, validation and test set and 50 case studies drawn from 11 individuals for the holdout set). Data splits for fitness, which sample multiple case studies from single individuals, were created to ensure that person-months were unique between the train and holdout test set. Although a single individual contributes case studies with unique person-months to both the train and holdout splits (N = 5 and N = 3, respectively), excluding these holdout samples did not substantially impact holdout performance (Supplementary Fig. 6). Within each fitness split, case studies from a given individual were selected from distinct days such that target person-days were unique across samples.

Because each case study had multiple sections (three sections for sleep and five sections for fitness), in total we obtained 3,271 question–answer pairs (457 × 3 + 300 × 5 = 2,871 for train/validation/test and 50 × 3 + 50 × 5 = 400 for the holdout evaluation).

PRO dataset creation

To evaluate the ability of PH-LLM to predict PROs from longitudinal passive sensor data, we used a large institutional review board (IRB)-approved study in which wearable data were collected for a population of 10,099 consented individuals for a 4-week period (ref. 28). At both intake and completion, participants were asked to complete two surveys: the PROMIS short-form Sleep Disruption and Sleep Impairment surveys (ref. 27), each containing eight questions with answers on a five-point Likert scale (Supplementary Tables 39 and 40). The study thus linked individuals’ perceived sleep quality and its impact on their functioning with longitudinal observed physiological (for example, heart rate and sleep duration) and behavioral (activity) measurements.

We used the completion survey responses for all 16 questions as the basis for prediction and, for each question, defined a binary outcome that compared the highest answer (for example, ‘strongly agree’) against all others (Supplementary Fig. 14). Features used to predict each binary outcome include 20 time-varying wearable measurements (Supplementary Table 41), each of which was collected from study participants over a 4-week span. Although most individuals have sensor data for over 21 days, distributions are heavily left-skewed (Supplementary Fig. 15). To obtain data points with complete labels and standardized input features, we filtered the dataset to retain only individuals who have all completion survey responses and at least 15 days of sensor data (N = 4,163 individuals). For each individual, we kept only the last 15 (possibly non-contiguous) days of sensor data, resulting in a 20 × 15 matrix that represents the wearable sensor data for each research participant over 15 days as well as a vector of 16 binary values as the prediction targets. We performed sensor data quality filtering by replacing values more than 4 s.d. from the population median with their nearest non-outlier values.
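The preprocessing steps above can be sketched as three small helpers. The sketch assumes that Likert answers are coded 1 to 5 with 5 as the highest answer, that the standard deviation is computed over the population values for a sensor, and that "nearest non-outlier value" means the most extreme observed value that still lies within 4 s.d. of the median. All function names are hypothetical.

```python
import statistics

def binarize(answer, top=5):
    """1 if the participant chose the highest Likert answer, else 0 (coding assumed)."""
    return int(answer == top)

def last_n_days(daily_values, n=15):
    """Keep the last n available days; return None if fewer than n exist."""
    return daily_values[-n:] if len(daily_values) >= n else None

def clip_outliers(values, n_sd=4.0):
    """Replace values beyond n_sd s.d. from the median with the nearest inlier."""
    med = statistics.median(values)
    sd = statistics.pstdev(values)
    lo, hi = med - n_sd * sd, med + n_sd * sd
    inliers = [v for v in values if lo <= v <= hi]
    lo_in, hi_in = min(inliers), max(inliers)
    return [min(max(v, lo_in), hi_in) for v in values]
```

Applying `last_n_days` to each of the 20 measurements yields the 20 × 15 input matrix, and applying `binarize` to each of the 16 completion survey answers yields the target vector.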

In total, we created 4,163 PRO samples, randomly split into 2,914 (70%) training examples, 416 (10%) validation examples and 833 (20%) testing examples. Each sample corresponds to a unique individual and contains labels for 16 binary outcomes, resulting in 46,624 (=2,914 × 16) training, 6,656 validation and 13,328 testing data points with binary labels.

Base model selection

To train PH-LLM from the most capable base model, we performed automated evaluation of several Gemini candidate model sizes and a medical LLM on the professional examination questions. The candidate models were Gemini Nano 1.0, Gemini Pro 1.0, Gemini Ultra 1.0 (ref. 26) and Med-PaLM 2 (ref. 2).

Model prompting to create case study candidate responses

Because Gemini Ultra 1.0 was the most accurate Gemini family base model on professional examinations, suggesting that it has appropriate domain knowledge in the areas of sleep and fitness, we explored the performance of this model on case studies. We prompted Gemini Ultra 1.0 by summarizing guidelines given to the experts for dataset creation. For example, the sleep experts generally were asked to follow the RU-SATED format (ref. 45) to generate sleep insights. To encourage Gemini Ultra 1.0 to generate high-quality case study responses, we similarly prompted it to follow the RU-SATED format and provided an explanation of what metrics should be used to assess each dimension (see Supplementary Tables 42–49 for details). We note that each case study consisted of multiple sections containing textual representations of aggregated sensor data that represent different queries and responses: three sections for sleep case studies (insights, etiology and recommendations) and five sections for fitness case studies (demographics, training load, sleep, health metrics and the assessment). Because each section represented a different aspect of the case study, we developed prompts specifically for each section (Supplementary Tables 42–44 for sleep; Supplementary Tables 45–49 for fitness). We represented aggregated sensor data as text to mimic observations of how the experts used and interpreted the case study data while creating gold standard responses. For sections that synthesized results from previous sections (that is, the etiology and recommendation sections in sleep case studies and the assessment section in fitness case studies), we substituted the model answers from previous sections into the prompt (Supplementary Table 49).

Training PH-LLM on case studies

We finetuned Gemini Ultra 1.0 on the case studies to create PH-LLM. We used the case studies from the training, validation and test sets for model training and selection (457 case studies from 457 individuals for sleep and 300 case studies from 48 individuals for fitness). For each of the sleep and fitness domains, we randomly split the case studies into separate training, validation and test splits using a 70:15:15 ratio. We used the same text prompts that were given to the baseline model to form prompt–response pairs for model tuning. Because each section was treated as a separate example, this resulted in 1,371 prompt–response pairs for sleep and 1,500 prompt–response pairs for fitness across the training, validation and test sets (Extended Data Fig. 1a,b).

Typically, LLMs are trained on mixtures of tasks (ref. 47). Here, we finetuned the model on a 1:1 mixture of sleep and fitness prompt–response pairs. Within the fitness prompt–response pairs, we upsampled higher-quality case studies by a 2:1 ratio, where higher-quality case studies were defined as those that underwent additional rounds of quality control by the fitness experts.

The Gemini Ultra 1.0 model, which is based on a transformer decoder (ref. 26), was finetuned for 1,500 steps with a global batch size of four using linear warmup over 50 steps and cosine decay. Finetuning was performed by minimizing the cross-entropy loss between predicted and target token distributions. We used a learning rate of \(2.5\times 10^{-7}\), a weight decay of \(1\times 10^{-2}\) and a learning rate decay minimum ratio of 0.1. We saved model checkpoints every 50 steps. For our final model candidate, we chose the first checkpoint (step = 550) after the model had been trained for at least one epoch. At this point, the validation loss had started to plateau and stabilize (Supplementary Fig. 16).
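One plausible form of the learning rate schedule described above is sketched here. It assumes that warmup rises linearly from zero to the peak learning rate, that the cosine decay spans the remaining steps and that the minimum ratio of 0.1 sets the floor as a fraction of the peak. The actual training framework and its exact schedule definition are not given in the text.

```python
import math

def learning_rate(step, peak=2.5e-7, warmup=50, total=1500, min_ratio=0.1):
    """Linear warmup followed by cosine decay to min_ratio * peak (assumed form)."""
    if step < warmup:
        return peak * step / warmup
    progress = min(1.0, (step - warmup) / (total - warmup))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return peak * (min_ratio + (1.0 - min_ratio) * cosine)
```

Under these assumptions the rate peaks at step 50 and is still in the upper part of the cosine curve at the selected checkpoint (step 550).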

Training PH-LLM for PROs

Using PH-LLM finetuned on case studies, we further finetuned an adapter for PH-LLM to predict PROs from wearable data. To do so, we used a subset of the Google DWB study dataset (ref. 28), which collected both wearable data and self-reported outcomes, and we trained the model following the methodology developed in HeLM (ref. 37). The wearable data of each individual consisted of 20 device measurements over 15 days and were passed to the model through both the textual prompt and the adapter. The textual prompt provided the age of the individual, listed the names of 20 device measurements and their mean values across 15 days and asked the model to predict either ‘yes’ or ‘no’ for a specific binary outcome (Supplementary Table 50). For the adapter, we standardized the wearable data by z-scoring using the training data mean and s.d. for each wearable sensor. Then, for each individual, we computed the mean and variance of z-scored sensor values across 15 days for each measurement, yielding a standardized and aggregated 20 × 2 sensor data matrix. This matrix was projected into four ‘soft tokens’ in the PH-LLM token embedding space by an MLP adapter with an input layer of size 40; three hidden layers of sizes 1,024, 4,096 and 1,024, each followed by a rectified linear unit (ReLU) activation function; and an output layer of size 4 × 14,336 = 57,344, where 14,336 is the token embedding size of PH-LLM. Finally, these four ‘soft tokens’ were passed to PH-LLM as a prefix to the textual prompt.
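The construction of the 40-dimensional adapter input and the adapter layer sizes can be sketched as follows. The use of population variance, the feature ordering (mean then variance per sensor) and the presence of bias terms in each layer are assumptions; the function names are hypothetical, and the sketch does not implement the MLP itself.

```python
import statistics

# Layer widths from the text: 40 -> 1,024 -> 4,096 -> 1,024 -> 4 x 14,336.
EMBED_DIM = 14336
N_SOFT_TOKENS = 4
LAYER_SIZES = [40, 1024, 4096, 1024, N_SOFT_TOKENS * EMBED_DIM]

def adapter_features(sensor_days, train_mean, train_sd):
    """Turn 20 sensors x 15 days into a flat list of 40 features.

    Each sensor is z-scored with training-set mean and s.d., then
    summarized by the mean and (population, assumed) variance over days.
    """
    feats = []
    for values, mu, sd in zip(sensor_days, train_mean, train_sd):
        z = [(v - mu) / sd for v in values]
        feats.extend([statistics.fmean(z), statistics.pvariance(z)])
    return feats

def count_params(sizes):
    """Weights plus biases for a dense MLP (bias terms are an assumption)."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))
```

The final layer dominates the parameter count because it maps 1,024 hidden units to all 57,344 soft-token coordinates at once; the output vector is then reshaped into four embeddings of size 14,336.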

The adapter was trained to minimize cross-entropy loss between the predicted and actual binary outcome value while keeping PH-LLM weights frozen. The adapter was trained for, at most, 30,000 steps using a global batch size of eight, a learning rate of \(1\times 10^{-4}\) with linear warmup over 50 steps and cosine decay. The final checkpoint was selected as the one with the highest AUPRC (averaged over 16 binary outcomes) on the validation dataset.

We compared the predictions on the test dataset from PH-LLM with adapter to those from text-only PH-LLM using either zero-shot or few-shot prompting. For zero-shot, the prompt was almost the same as that for PH-LLM with adapter, except that there was no adapter-based soft token prefix and the prompt also listed the variance across 15 days for each raw measurement value. For few-shot, the prompt additionally included three examples (the maximum number of complete examples that fit within the context window). For all three models, positive and negative outcomes were scored by computing the log likelihood for each outcome.

We fit a logistic regression model separately for each binary outcome where the input is the same as that to the PH-LLM adapter. We also trained a CNN using the z-scored wearable data of shape 20 × 15 (whose sensor-based means and variances are the input to the PH-LLM adapter and logistic regression models) as input. The CNN sequentially consists of two convolutional layers with kernel shapes (15, 1) and (1, 1), respectively, each followed by a batch normalization layer and a dropout layer with dropout probability 0.2, two feedforward layers with sizes 64 and 1 and a sigmoid activation at the end. Both convolutional layers have 16 filters, one-step strides and a ReLU activation.

Evaluating PH-LLM on professional examinations

We evaluated model performance on the professional examination dataset using a combination of CoT prompting (ref. 32) and self-consistency (ref. 31). For our primary results, we generated five reasoning paths per question and selected the most common response as the final answer. For questions that did not have a unique modal response, we selected the answer option with highest log probability as the final answer.
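The answer selection rule can be sketched as a majority vote with a log probability fallback. The sketch assumes that the fallback considers all answer options rather than only the tied ones, which is one reading of the description; the function name and input format are hypothetical.

```python
from collections import Counter

def self_consistent_answer(sampled_answers, option_logprobs):
    """Pick the modal answer across reasoning paths; break ties by log probability.

    sampled_answers: final answers from each reasoning path, e.g. ["A", "C", "A", ...]
    option_logprobs: dict mapping each answer option to its model log probability.
    """
    counts = Counter(sampled_answers)
    top = max(counts.values())
    modes = [answer for answer, c in counts.items() if c == top]
    if len(modes) == 1:
        return modes[0]
    # No unique mode: fall back to the most probable option (assumed over all options).
    return max(option_logprobs, key=option_logprobs.get)
```

With five reasoning paths, a tie arises only for vote patterns such as 2-2-1 or 1-1-1-1-1, so the fallback is used for a minority of questions.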

To assess the ability of PH-LLM to differentiate correct from incorrect answers based on confidence, we used a log probability analysis. For each multiple-choice examination question, we computed the model’s log probability for every answer option and applied softmax normalization to obtain a probability distribution over all answer options. For metric calculation, each answer option was treated as an individual prediction, comparing the model’s normalized log probability against a binary label (1 for a correct response, 0 otherwise). We then computed micro-averaged AUROC and micro-averaged AUPRC across the sleep and fitness examinations independently.
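The confidence analysis can be illustrated with a small sketch that normalizes per-option log probabilities with softmax, pools every answer option across questions as an individual prediction and computes AUROC by pairwise comparison. The pairwise estimator is a simple (quadratic-time) stand-in chosen for clarity, not necessarily the implementation used in the study, and the input format is hypothetical.

```python
import math

def softmax(logps):
    """Normalize log probabilities into a distribution over answer options."""
    m = max(logps)
    exps = [math.exp(x - m) for x in logps]
    total = sum(exps)
    return [e / total for e in exps]

def auroc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def micro_auroc(questions):
    """questions: list of (option_logprobs, index_of_correct_option) pairs."""
    scores, labels = [], []
    for logps, correct_idx in questions:
        probs = softmax(logps)
        scores.extend(probs)
        labels.extend(int(i == correct_idx) for i in range(len(probs)))
    return auroc(scores, labels)
```

Pooling options before computing the metric is what makes the average a micro-average: questions with more answer options contribute more predictions.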

Expert grading of case study responses and consistency

Although evaluation against MCQs and PROs can be performed by comparing model predictions to gold standard structured responses and numerical values, respectively, the case studies involve longer-form outputs. To evaluate these model responses, the domain experts (including all experts involved in creating the case study responses) were asked to evaluate three responses written to each case study: one by Gemini Ultra 1.0, one by PH-LLM and one by a domain expert (Extended Data Fig. 1c). Of the 100 holdout case studies, 94 had at least one expert who rated all three responses (45/50 in sleep and 49/50 in fitness); the remainder had multiple experts contribute ratings. Each domain expert was randomly assigned case studies for which they had not written the expert response and was blinded to the source of each response. The domain experts evaluated each case study response based on a custom rubric that quantifies incorporation of user data, appropriate personalization based on user data, use of expert domain knowledge, evidence of confabulations or unwarranted assumptions, potential for harm, readability and overall quality (Supplementary Table 9).

Holdout case studies were fully distributed across the primary group of experts. A portion of the holdout case studies was additionally assigned to the rest of the available domain experts.

To assess inter-rater agreement and analyze differences between primary and secondary rater groups, we introduced overlap across case study rating assignments within each vertical, resulting in 78–1,428 paired ratings within a subset of raters. To evaluate inter-rater reliability, we employed several established metrics: raw counts of agreement, pairwise Spearman’s rank correlation, Kendall’s coefficient of concordance (Kendall’s W), weighted Cohen’s kappa and Gwet’s AC2. Spearman’s correlation and Kendall’s coefficient of concordance are metrics based on comparing ranks of expert ratings. These may be more conservative in the presence of many ties, as in our data with many ratings clustered around 4 and 5 (Extended Data Fig. 4). Weighted Cohen’s kappa measures agreement between raters adjusted for chance agreement. The metric ranges from −1 to 1 with 0 indicating that the agreement among raters is similar to chance. Weighted Cohen’s kappa can exhibit paradoxical behavior (‘Kappa Paradox’), underestimating the true extent of agreement between raters (ref. 34) in imbalanced datasets such as ours. Gwet’s AC1, and its weighted extension designed for ordinal data, Gwet’s AC2, were designed to deal with class imbalance while adjusting for chance agreement (refs. 34,35). Qualitative trends for all metrics were consistent across rater pairs in both verticals (Supplementary Figs. 17 and 18).
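As a concrete example of one of these metrics, the sketch below computes weighted Cohen’s kappa for two raters on a 1 to 5 scale. Linear disagreement weights are assumed because the weighting scheme is not stated in the text, and returning NaN when chance disagreement is zero is a convention chosen for this sketch.

```python
def weighted_kappa(r1, r2, categories=(1, 2, 3, 4, 5)):
    """Weighted Cohen's kappa with linear disagreement weights (weights assumed)."""
    k, n = len(categories), len(r1)
    idx = {c: i for i, c in enumerate(categories)}
    obs = [[0.0] * k for _ in range(k)]
    for a, b in zip(r1, r2):
        obs[idx[a]][idx[b]] += 1.0 / n
    row = [sum(obs[i]) for i in range(k)]
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]

    def w(i, j):
        return abs(i - j) / (k - 1)  # 0 on the diagonal, 1 at maximum distance

    d_obs = sum(w(i, j) * obs[i][j] for i in range(k) for j in range(k))
    d_exp = sum(w(i, j) * row[i] * col[j] for i in range(k) for j in range(k))
    if d_exp == 0:
        return float("nan")  # both raters used a single identical category
    return 1.0 - d_obs / d_exp
```

The chance term `d_exp` depends on each rater’s marginal distribution, which is why heavily imbalanced ratings (for example, mostly 4s and 5s) can produce low kappa values despite high raw agreement.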

Automatic evaluation of case study responses

Although expert grading of case study responses was our primary mechanism for assessing model performance, AutoEval allows us to obtain a quick, although potentially less accurate, signal by using secondary models to perform this grading task (ref. 48).

AutoEval dataset: while exploring different modeling mechanisms, we performed an initial round of expert grading using the rubrics (Supplementary Table 9) and procedure described above for 50 expert-generated case studies from each vertical across three response sources: experts, an untuned Gemini Ultra 1.0 model and a finetuned Gemini Pro 1.0 model. We split these studies into vertical-specific training and validation splits containing approximately 80% (N = 38) and 20% (N = 12) of case studies, respectively. Splits were structured such that samples rated by a given expert were evenly distributed between sets. All ratings associated with a given case study were included in that split, resulting in N = 6,552 total ratings across case study sections and evaluation principles for sleep (N = 4,872 train and N = 1,596 validation) and N = 9,331 for fitness (N = 7,138 train and N = 2,193 validation).

AutoEval examples: using the rated case studies, we constructed LLM prompts and targets. Prompts included a description of the rating task objective for the given case study section, a summary of data describing the case study, the principle being assessed and the principle’s Likert scale options. Each target was the expert-generated rating followed by the rating’s Likert option text description (for example, for a ‘No incorrect domain knowledge’ principle rating of 5, the target is ‘5. No incorrect domain knowledge references exist’.) (Supplementary Table 51).

AutoEval model training: we created AutoEval rater models by finetuning the smaller Gemini Pro 1.0 model (ref. 26) using LoRA (ref. 49) across a variety of vertical-specific data mixtures, including all ratings for a vertical and all ratings from a single rater. All AutoEval modeling experiments used a fixed set of hyperparameters, varying only the training data mixture: a LoRA rank of 4 on attention heads, random Gaussian initialization for LoRA’s A matrix and zero initialization for LoRA’s B matrix. Models were tuned to minimize the cross-entropy loss between predicted and target token distributions using the Adafactor optimizer (ref. 50) with a constant learning rate of \(2\times 10^{-5}\), a β1 of 0.95, a decay of 0.995 and a gradient norm clipping threshold of 1.0. None of warmup, weight decay or quantization was used. Models were tuned for a maximum of 20 epochs with a global batch size of 32. Checkpoints were taken four times per epoch, and the best checkpoint was selected by minimizing validation set log perplexity. The total number of steps varied per model because each training mixture contained a different number of samples depending on the vertical and rater (Supplementary Table 52).

AutoEval model selection: we evaluated four candidate AutoEval models for each vertical. An untuned Gemini Pro 1.0 model served as a baseline. The other three AutoEval candidates were trained identically using different data mixtures: ‘All’ contained all ratings in the vertical; ‘High variance’ contained ratings from only the expert rater with highest rating variance (raters ‘Sleep Primary C’ and ‘Fitness Primary C’); and ‘Low variance’ contained ratings from only the expert rater with lowest rating variance (raters ‘Sleep Primary D’ and ‘Fitness Primary B’). We generated model predictions by scoring the likelihood of each Likert option given the input prompt, converted these scores into five-class multinomial probabilities and chose the option with the largest probability score. We selected the best AutoEval model for each vertical using a combination of log perplexity loss and Spearman’s rank correlation between predictions and the ground truth ratings in the validation dataset (referred to as ‘best AutoEval’ models).

AutoEval inference: given case study candidate responses, we used the Likert scoring procedure described above to automatically rate outputs across case study sections and evaluation principles with our best AutoEval models.

Statistical analyses

Confidence intervals (95%) were determined via bootstrapping with 1,000 iterations. Statistical significance of expert ratings was determined using a two-sided Wilcoxon rank-sum test with false discovery rate (FDR) (Benjamini–Hochberg) correction when multiple sections or multiple evaluation principles were analyzed together. All P values refer to P values after FDR correction. For each P value, we report the test statistic Z and the effect size \(r=Z/\sqrt{N}\), where N is the total sample size of the test.
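The statistical procedures above can be illustrated with a minimal sketch: a bootstrap confidence interval, Benjamini–Hochberg adjustment of P values and the effect size \(r=Z/\sqrt{N}\). The percentile form of the bootstrap interval and the fixed seed are assumptions; the text specifies only the number of iterations and the confidence level.

```python
import math
import random

def bootstrap_ci(values, stat, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for stat(values) (percentile form assumed)."""
    rng = random.Random(seed)
    n = len(values)
    stats = sorted(
        stat([values[rng.randrange(n)] for _ in range(n)]) for _ in range(n_boot)
    )
    lo = stats[int((alpha / 2) * n_boot)]
    hi = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi

def benjamini_hochberg(pvals):
    """Return FDR-adjusted P values in the original order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running = 1.0
    for rank in range(m, 0, -1):  # walk from the largest P value down
        i = order[rank - 1]
        running = min(running, pvals[i] * m / rank)
        adjusted[i] = running
    return adjusted

def effect_size_r(z, n):
    """Effect size r = Z / sqrt(N), with N the total sample size of the test."""
    return z / math.sqrt(n)
```

The test statistic Z itself would come from the two-sided Wilcoxon rank-sum test, which is not reimplemented in this sketch.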

Inclusion and ethics

All individuals who fulfilled the criteria for authorship required by Nature Portfolio journals have been included as authors. This work includes findings that are locally relevant, which have been determined in collaboration with local partners. Roles and responsibilities were agreed among collaborators ahead of the research. This research was not severely restricted or prohibited in the setting of the researchers. Ethics approval for the case studies was granted by the Western Institutional Review Board-Copernicus Group IRB, which classified the research as exempt under 45 CFR 46.104(d)(4). Ethics approval for the Digital Wellbeing Study was granted by the IRB of the University of Oregon. This research does not result in stigmatization, incrimination, discrimination or personal risk to participants. Local and regional research relevant to our study was taken into account in citations.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.